Ching Lam CHOI

I'm a second-year MIT EECS PhD student at CSAIL, co-supervised by Phillip Isola, Antonio Torralba and Stefanie Jegelka. During my bachelor's at CUHK, I researched with wonderful people: Aaron Courville and Yann Dauphin at MILA; Wieland Brendel at Tübingen's Max Planck Institute; Serge Belongie at Copenhagen's Pioneer Centre; and CUHK's Hongsheng Li (MMLab), Anthony Man-Cho So, Farzan Farnia and Qi Dou.

Research

I research how to inject structure into multi-modal foundation models. I am interested in cryptographic approaches to AI safety and robustness, such as property testing to certify model behaviour, one-way functions to deploy open-source models safely, and zero-knowledge proofs to probe (convergent and emergent) multi-agent systems. I am also working towards Platonic world models that reason in multiple modalities, e.g., sim2real for agents that perform experiments following the scientific method.

Fairness Aware Reward Optimization

Ching Lam Choi, Vighnesh Subramaniam, Phillip Isola, Antonio Torralba, Stefanie Jegelka
Preprint, 2026
Reducing LLM bias and toxicity by constraining the reward model to be independent of sensitive attributes, conditional on unrestricted features.

UNI: Unlearning-based Neural Interpretations

Ching Lam Choi, Alexandre Duplessis, Serge Belongie
ICLR, 2025 [oral]
Debiased path attribution by perturbing in the unlearning direction of steepest ascent.

On the Generalization of Gradient-based Neural Network Interpretations

Ching Lam Choi, Farzan Farnia
Preprint, 2023
Gradient-based XAI fails to generalise from train to test data: regularisation crucially helps.

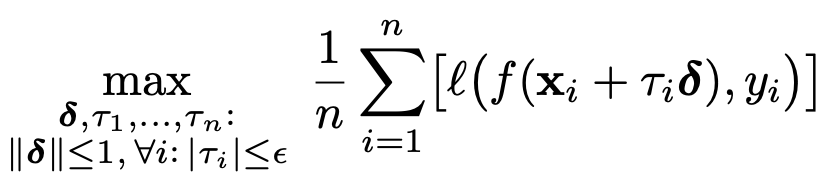

Universal Adversarial Directions

Ching Lam Choi, Farzan Farnia
Preprint, 2022
UAD is an adversarial examples game with a pure Nash equilibrium and better transferability.

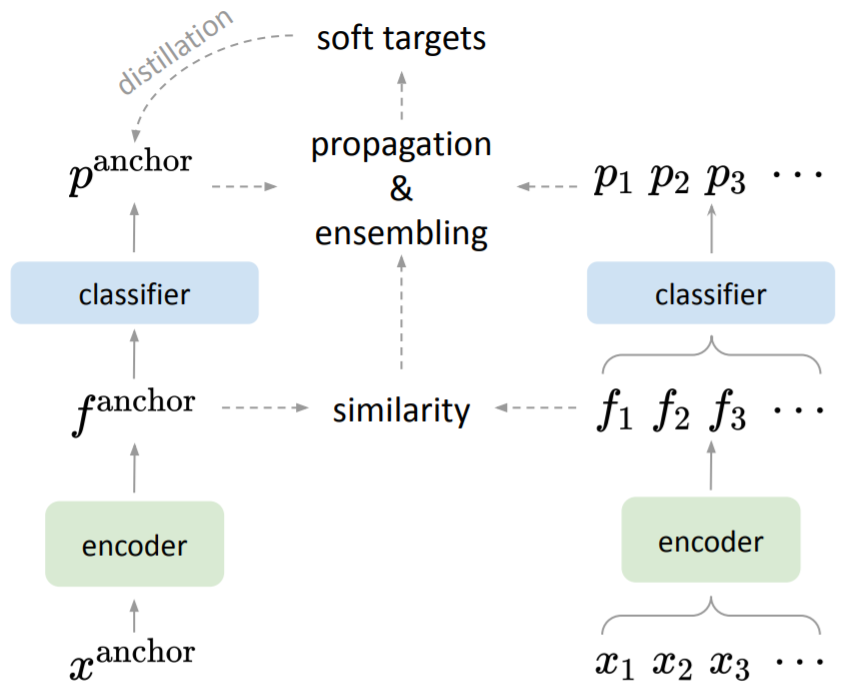

Self-distillation with Batch Knowledge Ensembling Improves ImageNet Classification

Yixiao Ge, Xiao Zhang, Ching Lam Choi, Ka Chun Cheung, Peipei Zhao, Feng Zhu, Xiaogang Wang, Rui Zhao, Hongsheng Li
Preprint, 2021
BAKE distils the self-knowledge of a network by propagating sample similarities within each batch.

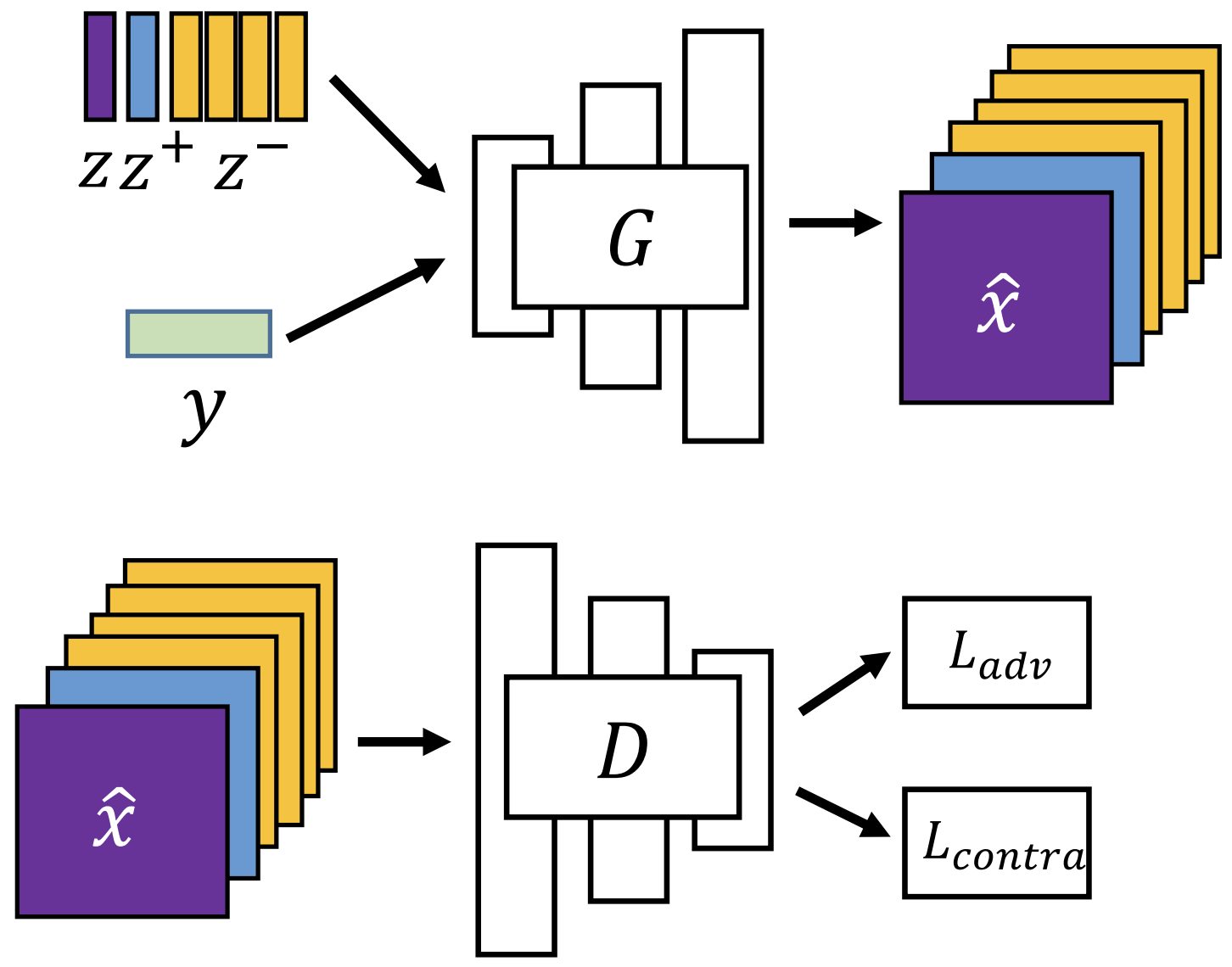

DivCo: Diverse Conditional Image Synthesis via Contrastive Generative Adversarial Network

Rui Liu, Yixiao Ge, Ching Lam Choi, Xiaogang Wang, Hongsheng Li
CVPR, 2021
DivCo uses contrastive learning to reduce mode collapse in GANs.

News, Talks, Events

[Delegate 18-22.02.2026 @G4G16] Selected as a scholarship recipient and participant of the 16th Gathering for Gardner conference!

[Delegate 13-19.09.2025 @HLF 2025] Selected as 1 of 200 young scientists from around the world to meet Fields Medalists, Turing awardees and other distinguished researchers in maths and computer science at the Heidelberg Laureate Forum (HLF) 2025.

[Talk 20.08.2025 @HKU IDS Seminar] "Seeing Beyond the Cave: Asymmetric Views of Representation" at HKU Musketeers Foundation Institute of Data Science, University of Hong Kong, hosted by Xihui Liu.

[Talk 14.08.2025 @UCPH ML Section] "Seeing Beyond the Cave: Asymmetric Views of Representation" at ML Section, DIKU, University of Copenhagen, hosted by Amartya Sanyal.

[Talk 13.08.2025 @P1] "Seeing Beyond the Cave: Asymmetric Views of Representation" at P1 Guest Talks, Pioneer Center for AI, Copenhagen, hosted by Serge Belongie.

[Talk 07.03.2025 @WiDS Cambridge] "Fairness Aware Reward Optimization" at Women in Data Science Cambridge oral/poster session, hosted by WiDS Cambridge.

[Talk 04.02.2025 @ISTA] "A Causality-inspired Critique of LLMs" at Locatello Group, hosted by Francesco Locatello.

[Talk 16.01.2025 @EPFL] "Fairness Symmetries for Preference Optimisation" at Bunne Lab, hosted by Charlotte Bunne.

[Talk 12.12.2024 @NeurIPS 2024] "WiML Mentee Spotlight" at Bridging the Future (NeurIPS 2024), representing Women in Machine Learning (WiML).

[Talk 27.05.2024 @Cambridge] "(Un)learning to Explain" at Krueger AI Safety Lab, hosted by David Krueger.

[Talk 22.03.2024 @Vector/UToronto] "Robust Scaling: Trustworthy Data" at CleverHans Lab, hosted by Nicolas Papernot.

[Presentation 08.05.2023 @MPI-IS] "Robustness Transfer in Distillation" at Brendel & Bethge joint lab meeting, hosted by Wieland Brendel.

[Talk 16.02.2022] "Federated learning and the Lottery Ticket Hypothesis" at ML Collective lab meeting, hosted by Rosanne Liu.

[Talk 31.07.2020] "Julia Track Google Code In and Beyond" at JuliaCon 2020.

[Talk 24.07.2020] "Corona-Net: Fighting COVID-19 With Computer Vision" at EuroPython 2020.

[Talk 13.06.2020] "Julia – Looks like Python, feels like Lisp, runs like C/Fortran" at Hong Kong Open Source Conference 2020.

[Co-organiser] New in ML Workshop @NeurIPS 2025

[Co-organiser] ML Tea @MIT CSAIL (seminar series)

[Co-organiser] Causality and Large Models Workshop @NeurIPS 2024

[Co-organiser] New in ML Workshop @NeurIPS 2024

[Co-organiser] New in ML Workshop @NeurIPS 2023

[Co-organiser] CoSubmitting Summer Workshop @ICLR 2022

[Co-organiser] Undergraduates in Computer Vision Social @ICCV 2021

[Reviewer] CVPR 2023, 2024; ICCV 2023; NeurIPS 2023, 2024; ICLR 2024, 2025; ICML 2024, 2025; ECCV 2024*; ACCV 2024; AAAI 2025; AISTATS 2025 (* indicates outstanding reviewer award).

Hobbies

Stephen Fry: "We are not nouns, we are verbs. I am not a thing [but] a person who does things."

Sports and Nature

I boulder, climb, hike, fence and play volleyball, and I would open a climbing gym called “Sloblock”.

Music

I adore the cello, oboe, French horn, voice and violin. Favourite artists include the Berliner Philharmoniker; the 12 Cellists of the Berlin Phil, The King's Singers; Tom Rosenthal, Joni Mitchell; Elgar, Haydn, Dvořák, Samuel Barber; Augustin Hadelich, Hilary Hahn; Jacqueline du Pré, Bruno Delepelaire, Yo-Yo Ma, Nathan Chan; Jonathan Kelly; Daniel Barenboim, Cornelius Meister.

Literature

I travel with comfort books and particularly adore Stefan Zweig ("Chess", "The World of Yesterday"), Franz Kafka ("The Castle"), Jan Morris ("Trieste and the Meaning of Nowhere"), Patti Smith ("Just Kids"), Patrick Leigh Fermor ("A Time of Gifts"), George Orwell ("Why I Write"), Paul Valéry ("Dialogues"), 吳明益 ("天橋上的魔術師"), Deborah Levy ("Things I Don't Want to Know") and Milan Kundera ("The Book of Laughter and Forgetting"). I am on Goodreads.

Website design credits to Jon Barron.